Reading the New York Times’ reporting on facial recognition in 2019 feels a little like watching a teenager attempt adulthood: confident, capable, and absolutely not ready for the responsibility. The technology is getting better far faster than the guardrails around it. And that gap – the one between capability and accountability – is where the trouble usually starts.

What stands out is how quickly facial recognition moved from sci-fi novelty to real-world deployment without society ever really agreeing on the rules. It’s impressive and unsettling at the same time. The engineer in me can’t help but admire the technical leap, but the human side keeps asking, “Should we maybe slow down before we accidentally turn every public space into a security checkpoint?”

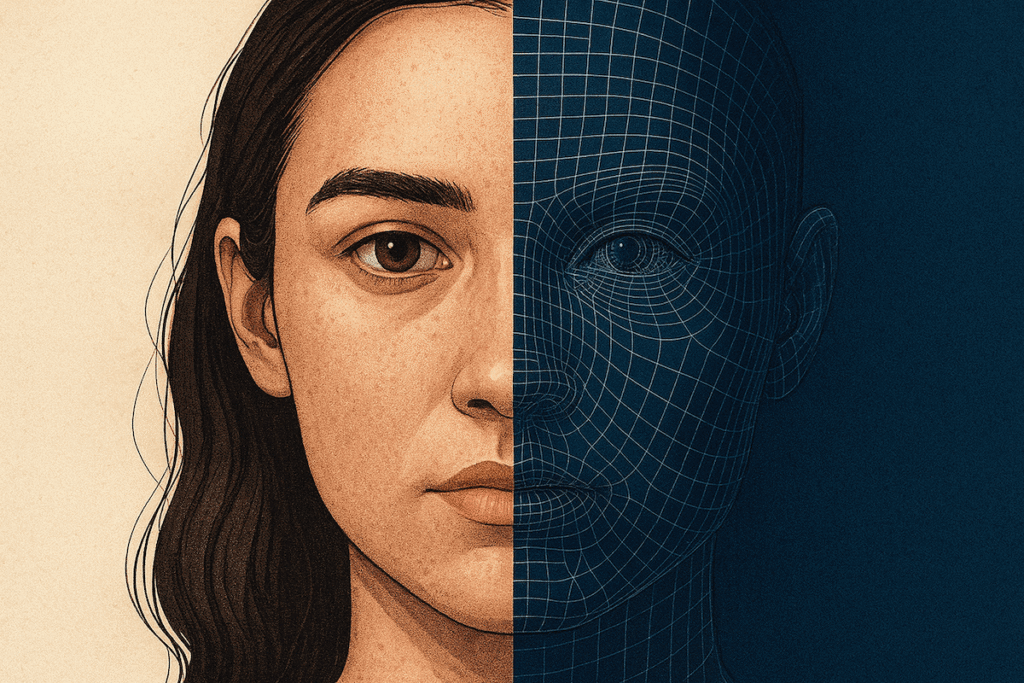

It’s easy to get distracted by accuracy rates and model performance. What’s harder is acknowledging that these systems interact with messy, complicated people, and not everyone is represented equally in the data. When a system recognizes some faces more reliably than others, that’s not just a glitch. It’s a mirror held a little too close.

If the tech keeps maturing this fast, can our laws, ethics, and leadership keep up? And what happens when “good enough” AI ends up making decisions that deserve more than “good enough” oversight?

Related article: NY Times