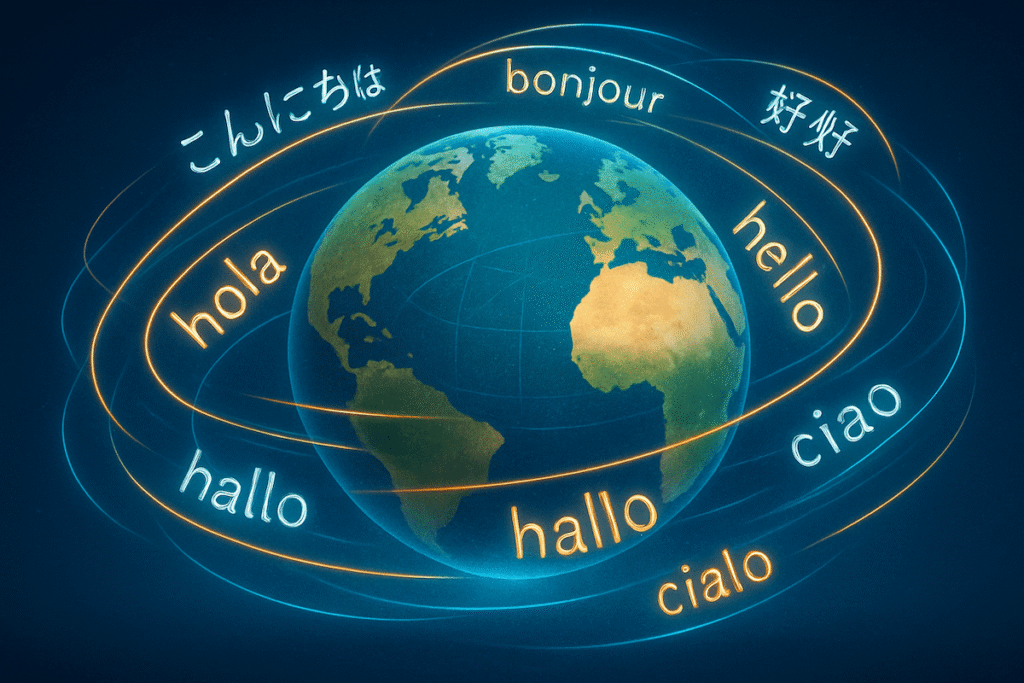

MIT Technology Review’s look at Google’s neural machine translation progress is a reminder of how quickly “impossible” things start feeling routine. A few years ago, machine translation sounded like a futuristic parlor trick. Now an algorithm translates full sentences with context, tone, and the occasional moment of flair. It’s not perfect, but compared to the awkward phrase-salad we were used to, it feels like watching a toddler go from babbling to full paragraphs.

What’s striking is how natural the improvement looks from a distance. Most users don’t care about attention layers or sequence models. They care that the output makes sense. Adoption takes off once a technology crosses the threshold from “helpful but clunky” to “quietly reliable.”

It also raises an interesting question about how much invisible AI we’re comfortable relying on. When translation gets good enough, we stop noticing the machine altogether. That’s powerful. And a little eerie.

If this is what AI can do with languages today, what happens when similar systems start shaping other everyday interactions? And at what point does “good enough” become “good enough that we stop thinking about it”?

Related article: MIT Technology Review